Learn about Databricks Delta Lake and Azure Delta Lake

What is Databricks Delta Lake?

Databricks Delta Lake is an open-source project that enables building a Lakehouse architecture on top of existing storage systems such as S3, ADLS, GCS, and HDFS.

Delta Lake is a new standard for building data lakes. It has the following key features:

| Feature | Description |
|---|---|
| ACID Transactions | Before Delta Lake added transactions, data engineers had to rely on manual, error-prone processes to ensure data integrity. Delta Lake brings familiar ACID transactions to data lakes and provides serializability, the strongest isolation level. |
| Scalable Metadata Handling | Delta Lake treats metadata just like data, using Spark’s distributed processing power to handle all of its metadata. As a result, Delta Lake can handle petabyte-scale tables with billions of partitions and files with ease. |
| Time Travel (Data Versioning) | Delta Lake provides snapshots of data, enabling you to revert to earlier versions for audits, rollbacks, or reproducing experiments. |
| Open Format | Apache Parquet is the baseline format for Delta Lake, so you can leverage the efficient compression and encoding schemes that are native to the format. |
| Unified Batch and Streaming Source and Sink | A table in Delta Lake is both a batch table and a streaming source and sink. Streaming data ingest, batch historic backfill, and interactive queries all work out of the box. |
| Schema Enforcement | Schema enforcement helps ensure that data types are correct and required columns are present, preventing bad data from causing inconsistency. |
| Schema Evolution | Delta Lake lets you make changes to a table schema that are applied automatically, without having to write migration DDL. |
| Audit History | The Delta Lake transaction log records details about every change made to the data, providing a full audit trail. |
| Updates and Deletes | Delta Lake provides Scala, Java, Python, and SQL APIs. Support for merge, update, and delete operations helps you meet compliance requirements. |
| 100% Compatible with Apache Spark API | Developers can use Delta Lake with their existing data pipelines with minimal changes, because it is fully compatible with existing Spark implementations. |
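
To make the transaction, time travel, and audit history features more concrete, here is a minimal sketch in plain Python. It is a toy model, not the Delta Lake API: the `ToyDeltaLog` and `Commit` names and the file names are hypothetical. It only illustrates the general idea that a table version can be reconstructed by replaying an ordered log of commits, each recording the files it added and removed.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Commit:
    """One entry in the toy log: files added and removed by an operation."""
    version: int
    operation: str
    added: tuple = ()
    removed: tuple = ()


@dataclass
class ToyDeltaLog:
    """Hypothetical in-memory stand-in for a table's transaction log."""
    commits: list = field(default_factory=list)

    def commit(self, operation, added=(), removed=()):
        # Each commit is appended as a single unit, so a reader sees
        # either the whole change or none of it.
        entry = Commit(len(self.commits), operation, tuple(added), tuple(removed))
        self.commits.append(entry)
        return entry.version

    def snapshot(self, version=None):
        # Replay the log up to `version` to find the files that make up
        # the table at that point in time.
        if version is None:
            version = len(self.commits) - 1
        if not 0 <= version < len(self.commits):
            raise ValueError(f"unknown version {version}")
        active = set()
        for entry in self.commits[: version + 1]:
            active -= set(entry.removed)
            active |= set(entry.added)
        return sorted(active)

    def history(self):
        # The same log doubles as an audit trail of every change.
        return [(c.version, c.operation) for c in self.commits]


log = ToyDeltaLog()
log.commit("WRITE", added=["part-0.parquet", "part-1.parquet"])
log.commit("DELETE", added=["part-2.parquet"], removed=["part-0.parquet"])

print(log.snapshot())    # files in the latest version
print(log.snapshot(0))   # "time travel" back to version 0
print(log.history())     # audit trail
```

Because old files are only marked as removed in the log rather than rewritten in place, earlier versions remain readable until they are cleaned up. In Delta Lake itself, an older version is requested with a read option such as `versionAsOf` instead of a hand-written replay like this one.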

Why Databricks Delta Lake?

Before Databricks Delta Lake, data lakes had the following gaps:

- Appending data is hard

- Modifying existing data is difficult

- Jobs fail midway

- Real-time operations are hard

- Keeping historical data versions is costly

- Large metadata is difficult to handle

- “Too many files” problems

To address these gaps, Databricks Delta Lake provides the following capabilities:

- ACID transactions
  - Every operation is transactional.
  - An operation either fully succeeds or is fully aborted so that it can be retried later.

- Spark under the hood
  - Spark is built for handling large amounts of data.
  - All Delta Lake metadata is stored in the open Parquet format.
  - Portions of the metadata are cached and optimized for fast access.
  - Data and its metadata always coexist.

- Indexing
  - The data layout is automatically optimized for fast access.
  - Partitioning, data skipping, and Z-ordering are provided.

- Schema validation and evolution
  - All data in Delta tables has to adhere to a strict schema.
  - Schema evolution is supported in merge operations.
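
The data skipping idea listed under indexing can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not Delta Lake code: the `FileStats` type, the `files_to_scan` helper, the `event_date` column, and the file names are all hypothetical. The sketch assumes each data file carries the minimum and maximum value of a column, so a query with a range filter can ignore files whose range cannot match.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    """Hypothetical per-file statistics for one column (here: event_date)."""
    path: str
    min_value: str
    max_value: str


def files_to_scan(files, lower, upper):
    """Return paths of files whose [min, max] range overlaps [lower, upper]."""
    return [
        f.path
        for f in files
        if f.max_value >= lower and f.min_value <= upper
    ]


files = [
    FileStats("part-0.parquet", "2023-01-01", "2023-01-31"),
    FileStats("part-1.parquet", "2023-02-01", "2023-02-28"),
    FileStats("part-2.parquet", "2023-03-01", "2023-03-31"),
]

# Query: WHERE event_date BETWEEN '2023-02-10' AND '2023-03-05'
selected = files_to_scan(files, "2023-02-10", "2023-03-05")
print(selected)  # part-0.parquet is skipped without being opened
```

Skipping works best when each file covers a narrow range of values. Clustering related values into the same files, which is the purpose of Z-ordering, keeps those ranges narrow so that more files can be ruled out.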

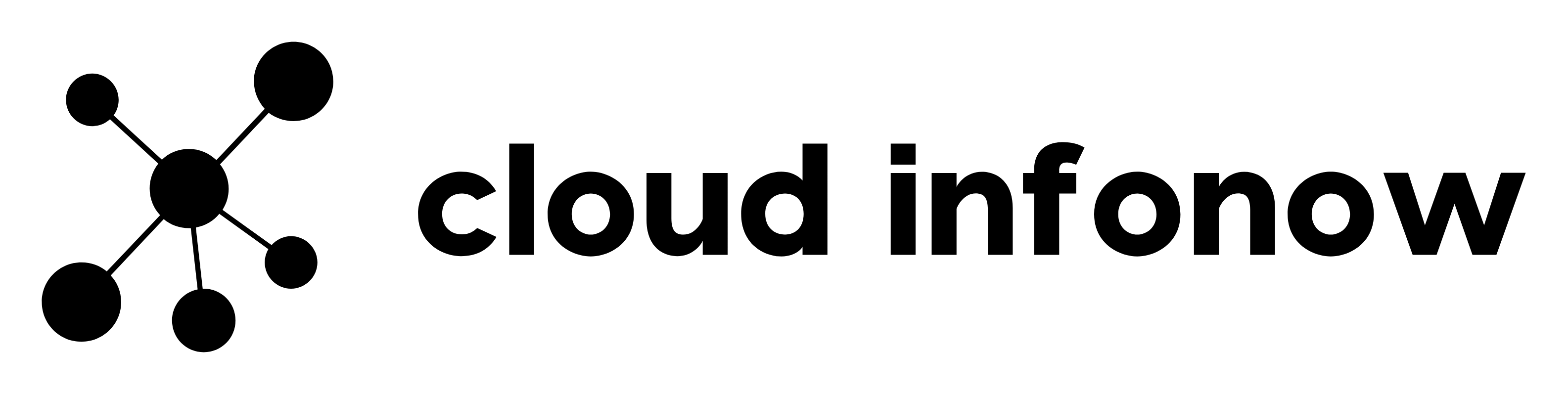

Delta Lake Architecture

As shown in the architecture diagram above, once data lands in an open data lake, Delta Lake is used to prepare that data for data engineering, business intelligence, SQL analytics, data science, and machine learning use cases.

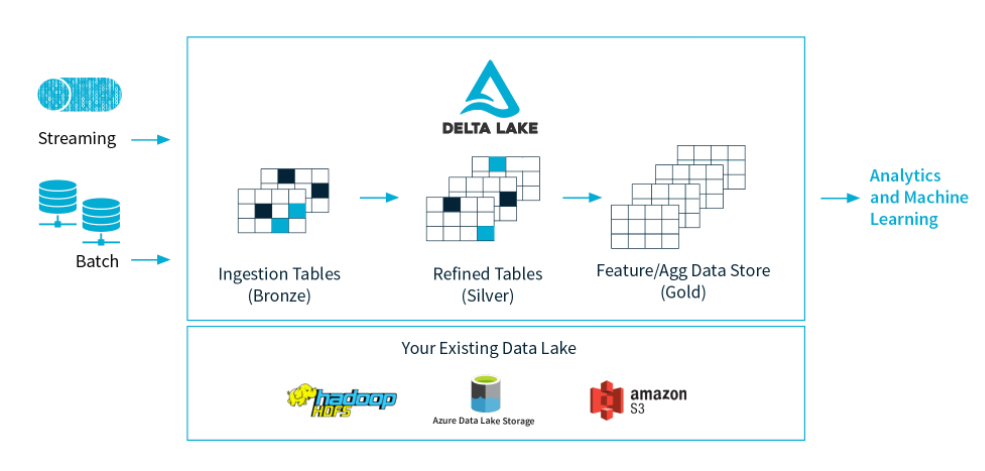

Components of Databricks Delta Lake

The following are the components of Delta Lake

Azure Delta Lake

Azure Delta Lake has all of the features and concepts described above. It is similar to Databricks Delta Lake, except that it is packaged for the Azure cloud environment.

Additional resources can be found in:

Databricks Documentation – https://docs.databricks.com/delta/index.html

Azure Databricks Documentation – https://docs.microsoft.com/en-us/azure/databricks/delta/